Measuring AI Agent Coverage

Using an autonomous testing agent introduces a new challenge: understanding what it has tested and what it has not. This information is needed to analyze the agent's effectiveness and to identify which areas of the target software are covered.

Traditional test coverage metrics

Test coverage is typically measured by analyzing the code, requirements, or test cases.

- Code coverage is typically calculated by analyzing the code and identifying which lines were executed during test runs. Tools instrument the code and keep track of code execution. Common code coverage metrics include branch coverage, statement coverage, function coverage, and line coverage.

- Test case coverage refers to how well test cases cover the system features. It is usually measured by mapping test cases to specific user stories using tracking tools.

- Requirement coverage measures which requirements are covered by test cases. Requirements can be functional or non-functional.
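As a rough illustration of the mapping-based metrics above, requirement coverage can be computed from a table that links test cases to the requirements they exercise. The sketch below is a minimal example only; the requirement IDs, test names, and the `requirement_coverage` helper are hypothetical and do not come from any particular tracking tool.

```python
def requirement_coverage(requirements, test_to_requirements):
    """Return (covered fraction, sorted list of uncovered requirement IDs)."""
    required = set(requirements)
    covered = set()
    for linked in test_to_requirements.values():
        covered.update(linked)
    covered &= required  # ignore links that point to unknown requirements
    uncovered = sorted(required - covered)
    fraction = len(covered) / len(required) if required else 0.0
    return fraction, uncovered


# Hypothetical data, for illustration only.
requirements = ["REQ-1", "REQ-2", "REQ-3", "REQ-4"]
test_to_requirements = {
    "test_login": ["REQ-1"],
    "test_checkout": ["REQ-2", "REQ-3"],
}

fraction, uncovered = requirement_coverage(requirements, test_to_requirements)
print(f"{fraction:.0%} covered, missing: {uncovered}")
```

A calculation like this depends entirely on the test-to-requirement mapping being available and kept up to date.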

An autonomous software agent that tests the target software through a user interface does not know about requirements, source code, or other test cases. As a result, the traditional coverage measurements above are not applicable.

UI coverage

The agent interacts with the target software through a user interface by emulating keyboard and mouse events. This means the agent can only test the areas of the software that are visible in the user interface.

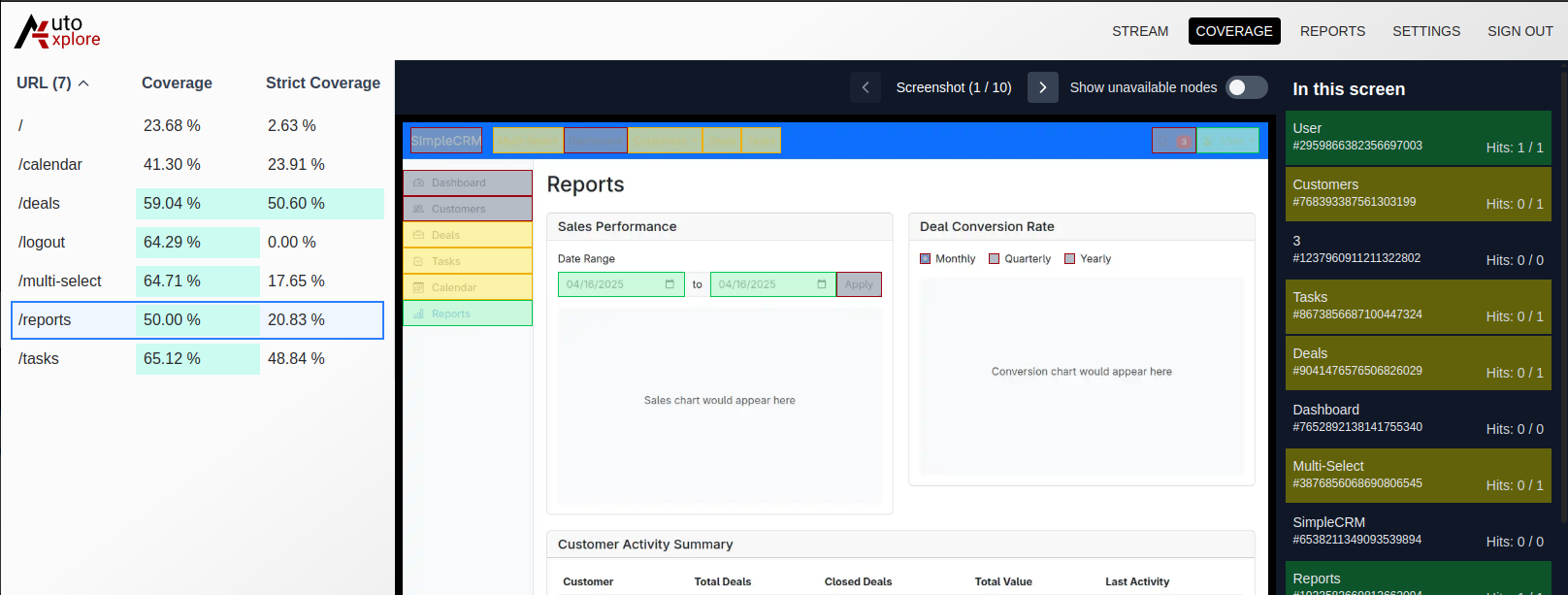

We used this gathered information as a basis for measuring test coverage. All the elements detected by The Eye are presented on a screenshot of the user interface. Due to the large number of elements, the AutoExplore coverage page now shows the elements categorized by URL, one screen at a time.

The elements are colored as follows:

- Green: The agent interacted with the element on the current page.

- Yellow: The agent interacted with the element on another page.

- Gray: The agent has not interacted with the element on any page.
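The color rules above can be sketched in a few lines. This is a minimal sketch under stated assumptions, not AutoExplore's actual implementation: it assumes each detected element has a stable identifier that is shared across pages and that every interaction is logged together with the URL it happened on. The names `Interaction`, `classify`, and `page_coverage` are hypothetical, and the per-page percentage is an assumed way to summarize the colors.

```python
from dataclasses import dataclass
from enum import Enum


class Color(Enum):
    GREEN = "green"    # interacted with on the current page
    YELLOW = "yellow"  # interacted with on another page only
    GRAY = "gray"      # never interacted with


@dataclass(frozen=True)
class Interaction:
    element_id: str  # assumed stable identifier for a detected element
    url: str         # page on which the interaction happened


def classify(element_id, current_url, interactions):
    """Pick the coverage color for one element as seen on current_url."""
    urls = {i.url for i in interactions if i.element_id == element_id}
    if current_url in urls:
        return Color.GREEN
    if urls:
        return Color.YELLOW
    return Color.GRAY


def page_coverage(element_ids, current_url, interactions):
    """Fraction of a page's elements that are green (an assumed summary metric)."""
    if not element_ids:
        return 0.0
    green = sum(
        classify(e, current_url, interactions) is Color.GREEN
        for e in element_ids
    )
    return green / len(element_ids)


# Hypothetical interaction log, for illustration only.
log = [Interaction("nav-home", "/a"), Interaction("save", "/b")]
for element in ["nav-home", "save", "help"]:
    print(element, classify(element, "/a", log).value)
```

In this example, `nav-home` is green on `/a`, `save` is yellow because it was only used on `/b`, and `help` is gray.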

Implementing visual coverage also gave us a way to verify that the agent recognizes the elements correctly.

At AutoExplore, we are committed to helping R&D teams implement autonomous testing as part of their development processes. Ready to transform your process? Contact us for a demo to learn more.